RCx, Rack Connect x(lane), is a new short-reach, passive copper, intra-rack cabling system running at 25 Gbps per lane. It is being driven and supported by a group of next-generation cloud interconnect developers. The 25 Gigabit Ethernet Consortium, the Ethernet Alliance and, perhaps soon, other I/O interface groups are supporting RCx, which connects ToR or MoR network switches to leaf servers within the same rack only.

RCx signaling complies with the IEEE 802.3by (25GE single-lane link) and 802.3bj (100GE) channel specifications as well as the OIF’s CEI-56-PAM4. It seems that the SFF Committee (www.sffcommittee.org) may be creating a new detailed connector and cable assembly specification in support of this effort, as has been done for many other high-speed interconnects.

It’s been about three months since the RCx MSA core organization made its announcement, and since then several other OEMs, suppliers and datacenter end-users have joined the group. You can go to www.RCx.MSA.org and read the announcement made back in January, including the concept images and PowerPoint presentation. The goal appears to be shipping products sometime this year. A plugfest certification event will likely be held in the near future to ensure compliance and compatibility of connectors and cables from different suppliers, which is the best way to ensure a successful launch for a very high-volume commodity interconnect. Regardless, beware of non-compliant product with the most attractive pricing, and take a close look at each supplier’s test data to determine the best design/cost/performance tradeoff for your rack FRU interconnect system.

Driven by the cost needs of 100k cloud servers in a single farm

Intra-datacenter and intra-HPC networks have had various active PCBA circuits and intelligence embedded within modules and cable plugs, such as active SFP28 and QSFP28 smart interconnects. These provide functions such as product ID, security screening, rack/port location, signal conditioning/retiming, heterogeneous active equipment compatibility, compliance histograms and many other feature sets. Such devices or chips and their related software support high-speed interfaces like InfiniBand, Fibre Channel and RapidIO. Intel’s Omni-Path fabric management system is an example. Will the RCx passive two-pin ID system use new RCx-specific software for fabric management, and will it be different from or compatible with current software offerings? It seems that the associated software programs and tools may have implications for which interconnect hardware datacenter end-users choose.

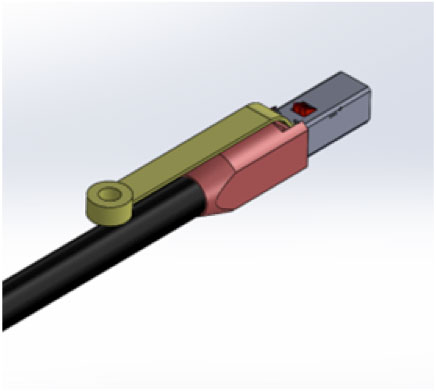

Will RCx production pull-tabs all be of the same design, or will they differ and thus provide IP opportunities? The pull-tab would have to be specified at the right level of detail, together with the receptacle latch-post, to ensure compatibility between cable assembly products from different sources. It is ironic that the RCx receptacle and plug connector look a lot like the venerable and similar-sized HSSDC-2 connector system that was selected for 1GbE and 1GFC single-lane link connectivity some 17 years ago.

The number of possible RCx adapter cable assemblies is amazing. Here’s a partial list:

- RCx1 to SFP28, RCx1 to uSFP, RCx1 to SFP56, RCx2 to MiniLink, RCx1 to Slimline, RCx1 or RCx2 or RCx3 to USB Type-C, four legs of RCx1 to one QSFP28 or to microQSFP, four legs of RCx1 to one QSFP-DD plug, etc.

- RCx4 to QSFP28, RCx4 to uQSFP, RCx4 to one uQSFP56, RCx4 to one QSFP-DD plug, RCx4 to one OCuLink, three legs of RCx4 to CXP28, etc.

Maybe there will be RCx2 to Cat 8 for some 25GBASE-T applications? Keeping track of part numbers should be fun, as each cable product can come in five different popular lengths. I’ll keep you updated on this development in future blog posts, so stay tuned.
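To get a feel for how quickly the part-number count grows, here is a rough sketch that multiplies the adapter variants from the partial list above by five lengths. The part-number format and the length codes `L1` to `L5` are placeholders of my own, not anything from the RCx MSA, since the actual lengths and numbering scheme are not stated.

```python
from itertools import product

# Adapter variants from the partial list above, as (RCx end, far end).
# "4xRCx1" and "3xRCx4" are shorthand for the multi-leg breakout cables.
VARIANTS = [
    ("RCx1", "SFP28"),
    ("RCx1", "uSFP"),
    ("RCx1", "SFP56"),
    ("RCx2", "MiniLink"),
    ("RCx1", "Slimline"),
    ("RCx1", "USB-C"),
    ("RCx2", "USB-C"),
    ("RCx3", "USB-C"),
    ("4xRCx1", "QSFP28"),
    ("4xRCx1", "microQSFP"),
    ("4xRCx1", "QSFP-DD"),
    ("RCx4", "QSFP28"),
    ("RCx4", "uQSFP"),
    ("RCx4", "uQSFP56"),
    ("RCx4", "QSFP-DD"),
    ("RCx4", "OCuLink"),
    ("3xRCx4", "CXP28"),
]

# Placeholder length codes (assumption): five lengths, actual values unknown.
LENGTH_CODES = ["L1", "L2", "L3", "L4", "L5"]


def part_numbers(variants=VARIANTS, lengths=LENGTH_CODES):
    """Return one hypothetical part number per (variant, length) pair."""
    return [
        f"{near}-{far}-{length}"
        for (near, far), length in product(variants, lengths)
    ]


if __name__ == "__main__":
    parts = part_numbers()
    print(len(parts))  # 17 variants x 5 lengths = 85
```

That is 85 part numbers from a partial list alone, before counting wire gauges, jacket options or multiple suppliers.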
